Finger representations

Example of a fit of fixed and component pcm-models to data from M1. The models and data is taken from Ejaz et al. (2015). Hand usage predicts the structure of representations in sensorimotor cortex, Nature Neuroscience.

We will fit the following 5 models

null: G=np.eye, all finger patterns are equally far away from each other, Note that in many situations the no-information null model, G = np.zeros, maybe more appropriate

Muscle: Fixed model with G = covariance of muscle activities

Natural: Fixed model with G = covariance of natural movements

Muscle+nat: Combination model of muscle and natural covariance

Noiseceil: Noise ceiling model

[1]:

# Import necessary libraries

import PcmPy as pcm

import numpy as np

import pickle

import pandas as pd

import matplotlib.pyplot as plt

import seaborn as sns

Read in the activity Data (Data), condition vector (cond_vec), partition vector (part_vec), and model matrices for Muscle and Natural stats Models (M):

[2]:

f = open('data_demo_finger7T.p','rb')

Data,cond_vec,part_vec,modelM = pickle.load(f)

f.close()

Now we are build a list of datasets (one per subject) from the Data and condition vectors

[3]:

Y = list()

for i in range(len(Data)):

obs_des = {'cond_vec': cond_vec[i],

'part_vec': part_vec[i]}

Y.append(pcm.dataset.Dataset(Data[i],obs_descriptors = obs_des))

Inspect the data

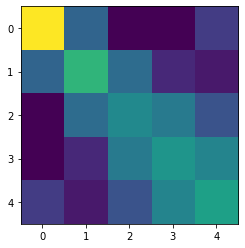

Before fitting the models, it is very useful to first visualize the different data sets to see if there are outliers. One powerful way is to estimate a cross-validated estimate of the second moment matrix. This matrix is just another form of representing a cross-validated representational dissimilarity matrix (RDM).

[16]:

# Estimate and plot the second moment matrices across all data sets

N=len(Y)

G_hat = np.zeros((N,5,5))

for i in range(N):

G_hat[i,:,:],_ = pcm.est_G_crossval(Y[i].measurements,

Y[i].obs_descriptors['cond_vec'],

Y[i].obs_descriptors['part_vec'],

X=pcm.matrix.indicator(Y[i].obs_descriptors['part_vec']))

[5]:

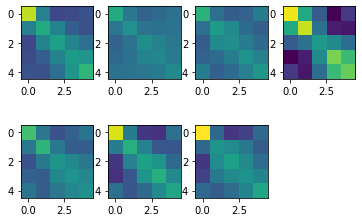

# show all second moment matrices

vmin = G_hat.min()

vmax = G_hat.max()

for i in range(N):

plt.subplot(2,4,i+1)

plt.imshow(G_hat[i,:,:],vmin=vmin,vmax=vmax)

Nice - up to a scaling factor (Subject 4 has especially high signal-to-noise) all seven subjects have a very similar structure of the reresentation of fingers in M1.

If you are more used to looking in representational dissimilarity matrices (RDMs), you can also transform the second momement matrix into this (see Diedrichsen & Kriegeskorte,2017)

[17]:

from scipy.spatial.distance import squareform

C = pcm.pairwise_contrast(np.arange(5))

RDM = squareform(np.diag(C @ G_hat[0,:,:]@C.T))

plt.imshow(RDM)

[17]:

<matplotlib.image.AxesImage at 0x1269684f0>

Build the models

Now we are building a list of models, using a list of second moment matrices

[18]:

# Make an empty list

M = []

# Null model: All fingers are represented independently.

# For RSA model that would mean that all distances are equivalent

M.append(pcm.FixedModel('null',np.eye(5)))

# Muscle model: Structure is given by covariance structure of EMG signals

M.append(pcm.FixedModel('muscle',modelM[0]))

# Usage model: Structure is given by covariance structure of EMG signals

M.append(pcm.FixedModel('usage',modelM[1]))

# Component model: Linear combination of the muscle and usage model

M.append(pcm.ComponentModel('muscle+usage',[modelM[0],modelM[1]]))

# Free noise ceiling model

M.append(pcm.FreeModel('ceil',5)) # Noise ceiling model

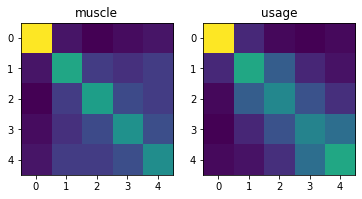

Now let’s look at two underlying second moment matrices - these are pretty similar

[19]:

for i in range(2):

plt.subplot(1,2,i+1)

plt.imshow(M[i+1].G)

plt.title(M[i+1].name)

Model fitting

Now let’s fit the models to individual data set. There are three ways to do this. We can fit the models

to each individual participant with it’s own parameters \(\theta\)

to each all participants together with shared parameters, but with an individual parameter for the signal strength and for group.

in a cross-participant crossvalidated fashion. The models are fit to N-1 subjects and evaluated on the Nth subject.

[20]:

# Do the individual fits - suppress verbose printout

T_in, theta_in = pcm.fit_model_individ(Y,M,fit_scale = True, verbose = False)

[22]:

# Fit the model in to the full group, using a individual scaling parameter for each

T_gr, theta_gr = pcm.fit_model_group(Y, M, fit_scale=True)

Fitting model 0

Fitting model 1

Fitting model 2

Fitting model 3

Fitting model 4

[23]:

# crossvalidated likelihood

T_cv, theta_cv = pcm.fit_model_group_crossval(Y, M, fit_scale=True)

Fitting model 0

Fitting model 1

Fitting model 2

Fitting model 3

Fitting model 4

Inspecting and interpreting the results

The results are returned as a nested data frame with the likelihood, noise and scale parameter for each individuals

[24]:

T_in

[24]:

| variable | likelihood | noise | iterations | scale | ||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| model | null | muscle | usage | muscle+usage | ceil | null | muscle | usage | muscle+usage | ceil | null | muscle | usage | muscle+usage | ceil | null | muscle | usage | muscle+usage | ceil |

| 0 | -42231.412711 | -41966.470799 | -41786.672956 | -41786.672927 | -41689.860467 | 0.875853 | 0.871286 | 0.868482 | 0.868483 | 0.872297 | 4.0 | 4.0 | 4.0 | 7.0 | 30.0 | 0.109319 | 0.750145 | 0.786771 | 1.000000 | 0.996839 |

| 1 | -34965.171104 | -34923.791342 | -34915.406608 | -34914.959612 | -34889.042762 | 1.070401 | 1.067480 | 1.069075 | 1.068119 | 1.066987 | 4.0 | 4.0 | 4.0 | 16.0 | 21.0 | 0.045008 | 0.324407 | 0.322917 | 0.963006 | 0.998003 |

| 2 | -34767.538097 | -34679.107626 | -34632.643241 | -34632.642946 | -34571.750931 | 1.026408 | 1.021219 | 1.019122 | 1.019123 | 1.023299 | 4.0 | 4.0 | 4.0 | 7.0 | 32.0 | 0.059863 | 0.435483 | 0.463987 | 1.000000 | 0.992453 |

| 3 | -45697.970627 | -45609.052395 | -45448.518276 | -45448.518254 | -45225.784824 | 1.480699 | 1.479592 | 1.474026 | 1.474025 | 1.478701 | 4.0 | 4.0 | 4.0 | 6.0 | 18.0 | 0.173031 | 1.193770 | 1.235628 | 1.000000 | 0.998176 |

| 4 | -31993.363827 | -31866.288313 | -31806.982719 | -31806.982521 | -31707.184233 | 0.808482 | 0.805621 | 0.805774 | 0.805774 | 0.807319 | 4.0 | 4.0 | 4.0 | 6.0 | 32.0 | 0.073935 | 0.516338 | 0.532421 | 1.000000 | 0.999360 |

| 5 | -41817.234010 | -41632.061473 | -41543.438786 | -41543.438769 | -41439.111953 | 1.035696 | 1.031827 | 1.031649 | 1.031648 | 1.034879 | 4.0 | 4.0 | 4.0 | 5.0 | 19.0 | 0.116696 | 0.801114 | 0.828773 | 1.000000 | 0.999325 |

| 6 | -50336.142592 | -50201.799362 | -50173.300358 | -50173.300306 | -50099.140706 | 1.479001 | 1.472401 | 1.474430 | 1.474428 | 1.476145 | 4.0 | 4.0 | 4.0 | 12.0 | 29.0 | 0.101477 | 0.714043 | 0.723969 | 1.000000 | 0.981997 |

The likelihoods are very negative and quite different across participants, which is expected (see documentation). What we need to interpret are the difference is the likelihood relative to a null model. We can visualized these using the model_plot

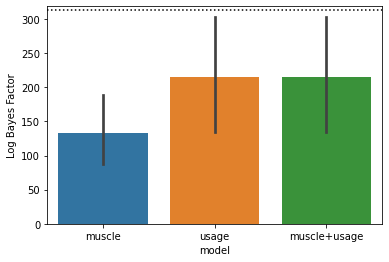

[25]:

ax = pcm.model_plot(T_in.likelihood,

null_model = 'null',

noise_ceiling= 'ceil')

The problem with the noise ceiling is that it is individually fit to each subject. It has much more parameters than the models it is competing against, so it is overfitting. To compare models with different numbers of parameters directly, we need to look at our cross-validated group fits. The group fits can be used as an upper noise ceiling.

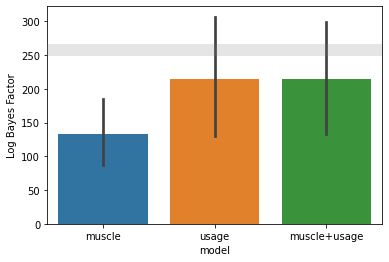

[26]:

ax = pcm.model_plot(T_cv.likelihood,

null_model = 'null',

noise_ceiling= 'ceil',

upper_ceiling = T_gr.likelihood['ceil'])

As you can see, the likelihood for individual, group, and crossvalidated group fits for the fixed models (null, muscle + usage) are all identical, because these models do not have common group parameters - in all cases we are fitting an individual scale and noise parameter.

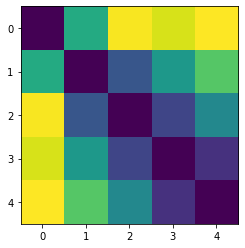

Visualizing the model fit

Finally, it is very useful to visualize the model prediction in comparision to the fitted data. The model parameters are stored in the return argument theta. We can pass these to the Model.predict() function to get the predicted second moment matrix.

[27]:

G,_ = M[4].predict(theta_gr[4][:M[4].n_param])

plt.imshow(G)

[27]:

<matplotlib.image.AxesImage at 0x12633f6a0>